By: Dr. Ajish K. Abraham

Professor of Electronics and Acoustics; Head, Dept. of Electronics

All India Institute of Speech and Hearing (AIISH)

We humans are bestowed with five senses: sight, hearing, taste, smell, and touch. What we feel or experience, we express or communicate. But not everyone is fortunate enough to communicate, and it takes a lot of effort to serve people suffering from communication disorders.

The All India Institute of Speech and Hearing (AIISH), a national institute under the Ministry of Health and Family Welfare, Govt. of India, is an institution that has been relentlessly working towards the betterment of lives for more than 52 years. During this time, AIISH has established itself as a world-class institute for human resource development, conducting need-based research, striving for excellence in clinical services, and creating awareness and public education in the field of communication disorders.

From April 2016 to March 2017, the institute assessed 70,000 people for communication disorders. Recognized as a center of excellence in deafness by the World Health Organization (WHO), the institute includes speech, language, hearing sciences, and disorders as its main areas of research.

Early identification of communication disorders

The communication of a newborn begins at birth through interacting with the mother. Infants receive inputs for language development through child-parent interactions, which require a perfect speech production system and a normal hearing mechanism.

Articulation conveys meanings, thoughts, ideas, concepts, and attitudes through sounds, words, phrases, and sentences and forms the most important activity in speech production. Phonology refers to the adjustments made in the speech production mechanism that result in different speech sounds.

Defects in these processes lead to articulatory and phonological disorders, which are treatable if they are identified at an early stage. Infant screening, which identifies infants at high risk for communication disorders, is therefore an important activity at AIISH. Clinicians at AIISH screened 55,000 newborns between April 2016 and March 2017.

The otoacoustic emission (OAE) test has been in use to identify infants with hearing disorders, whereas no such objective tests were previously available to identify those with speech disorders.

I-Cry – A Milestone For Infant Screening


A team of researchers led by Dr. N. Sreedevi at AIISH recently developed a tool for infant cry analysis that has the potential to be used as a screening tool to identify infants at risk of speech disorders. A cry is an infant's first verbal communication and is a product of the respiratory and phonatory systems.

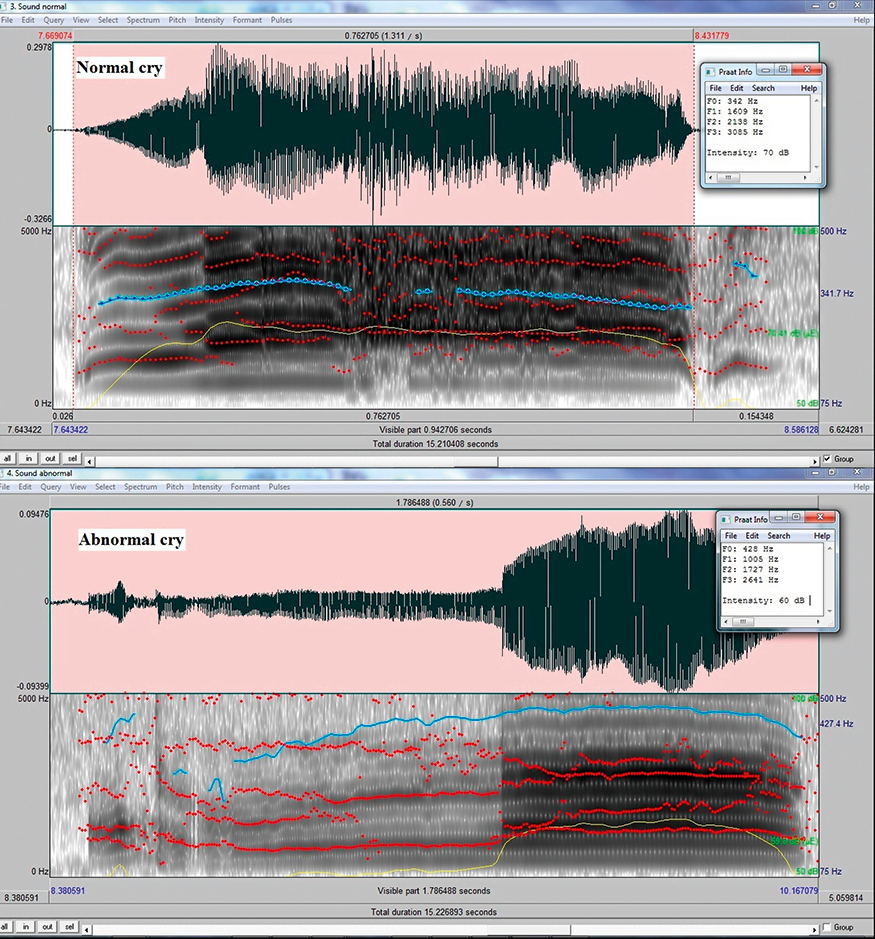

The tool developed at AIISH, called i-cry, records and measures the cries of healthy and high-risk newborns. The recording is done using an Olympus LS-100 Multi-track Linear PCM Recorder at 48 kS/s, positioned in front of each newborn with an external directional microphone placed 10 cm away from the newborn’s mouth. Fifteen seconds of the recorded cries are measured on twenty acoustic parameters including duration of the cry, fundamental and formant frequencies, noise-related parameters, number of pulses, number of periods, number of voice breaks, and degree of voice breaks.
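
To make one of the listed parameters concrete, the sketch below estimates the fundamental frequency of a short frame by autocorrelation. It is only an illustration of the general idea, not the method used by i-cry or by the analysis software: the function name `estimate_f0`, the 200 to 1000 Hz search range, and the synthetic test tone are all assumptions introduced here. Only the 48 kS/s sampling rate comes from the recording setup described above.

```python
import math


def estimate_f0(frame, sample_rate, fmin=200.0, fmax=1000.0):
    """Estimate the fundamental frequency (Hz) of one frame by autocorrelation.

    fmin and fmax bound the search range; they are assumed values chosen for
    illustration, not settings taken from the i-cry tool.
    """
    n = len(frame)
    mean = sum(frame) / n
    x = [s - mean for s in frame]  # remove any DC offset

    min_lag = max(1, int(sample_rate / fmax))       # shortest period considered
    max_lag = min(int(sample_rate / fmin), n - 1)   # longest period considered

    best_lag, best_score = None, 0.0
    for lag in range(min_lag, max_lag + 1):
        # Correlate the frame with a copy of itself delayed by `lag` samples.
        score = sum(x[i] * x[i + lag] for i in range(n - lag))
        if score > best_score:
            best_lag, best_score = lag, score

    if best_lag is None:
        return None  # no positive correlation found, e.g. a silent frame
    return sample_rate / best_lag


# Synthetic 400 Hz tone at 48 kS/s, standing in for a voiced cry segment.
SAMPLE_RATE = 48_000
tone = [math.sin(2 * math.pi * 400 * t / SAMPLE_RATE) for t in range(2048)]
f0 = estimate_f0(tone, SAMPLE_RATE)
print(f"Estimated F0: {f0:.1f} Hz")
```

A real cry is far less regular than a pure tone, so a practical estimator would need voicing detection and smoothing across frames; the sketch only shows where a number such as "fundamental frequency" can come from.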

Using PULSE Reflex™ analysis software, the values of the parameters are estimated from the time-domain waveform and spectrogram of the recorded cries. The values obtained are then compared with normative values to identify the cries of high-risk newborns. The aim of the screening tool is to aid in early detection of any pathological conditions the infant is susceptible to and to facilitate further investigations leading to early rehabilitation of such high-risk infants.

Comparison of normal and abnormal cries using PULSE Reflex™ analysis software

Helping children with cleft lip and palate

One in 700 children born in the world has a cleft lip and one in 2,000 children has a cleft palate. The speech of people with cleft lip and palate (CLP) is often unintelligible and they often face difficulty in communicating with others. Surgery along with several sessions of speech and language therapy helps them to overcome this problem to a large extent.

However, it is a time-consuming process and improvement in intelligibility is measured subjectively by speech-language pathologists. There is a lack of experts in this field and this leads to difficulty in appropriately assessing, at adequate intervals, the rehabilitation of people with CLP, even those who have undergone early surgical intervention.

The assessment of speech in people with CLP typically involves using perceptual, acoustic, and aerodynamic methods and estimating the correlation among these. This approach is either manual or semi-automatic and is, therefore, time-consuming.

Nasospeech, another research activity conducted at AIISH by a team that I lead, in collaboration with the team led by Prof. S.R.M. Prasanna at the Indian Institute of Technology, Guwahati, addresses this issue. Nasospeech is a computer-assisted diagnostic system for assessing the severity of the speech disorder. Speech samples of the person with CLP are acquired by the computer through its microphone. The Nasospeech software analyzes these samples and derives various acoustic and aerodynamic parameters. The values of these parameters are compared with the values for normal speech, and severity is graded based on the extent of the deviation.
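
The idea of grading severity by the extent of deviation can be sketched roughly as follows. This is not the Nasospeech algorithm: the parameter names, the reference means and standard deviations, the root-mean-square scoring, and the grade cut-offs are all hypothetical placeholders chosen so the example runs.

```python
import math
from bisect import bisect_right

# Hypothetical (mean, standard deviation) pairs for normal speech.
# Names and numbers are placeholders, not Nasospeech reference data.
NORMAL_REFERENCE = {
    "acoustic_a": (10.0, 2.0),
    "acoustic_b": (50.0, 5.0),
    "aerodynamic_a": (100.0, 20.0),
}

# Assumed cut-offs on the overall deviation score, and a label for each band.
GRADE_CUTOFFS = [1.0, 2.0, 3.0]
GRADE_LABELS = ["normal", "mild", "moderate", "severe"]


def deviation_score(measured, reference=NORMAL_REFERENCE):
    """Root-mean-square z-score of the measured parameters."""
    z_scores = [
        (measured[name] - mean) / std
        for name, (mean, std) in reference.items()
    ]
    return math.sqrt(sum(z * z for z in z_scores) / len(z_scores))


def grade_severity(measured):
    """Map the overall deviation score onto an ordinal severity grade."""
    score = deviation_score(measured)
    return GRADE_LABELS[bisect_right(GRADE_CUTOFFS, score)]


# Placeholder measurements for one speaker.
sample = {"acoustic_a": 16.0, "acoustic_b": 45.0, "aerodynamic_a": 60.0}
print(grade_severity(sample))
```

Expressing each parameter as a z-score puts quantities with different units on a common scale before they are combined; a clinical system would derive both the reference statistics and the cut-offs from validated data.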

The scope of the Nasospeech project includes developing a method to automate the assessment process, which would result in a faster and more accurate assessment. This will help professionals involved in the assessment and rehabilitation of people with CLP, such as speech pathologists, plastic surgeons, and maxillofacial surgeons.

This will also help people with CLP, as well as their caregivers, to take advantage of fast and efficient services that will further enhance their quality of life. According to Dr. M. Pushpavathi, an experienced speech-language pathologist, the results at the end of the first phase look very encouraging.

Correction of Misarticulated Sound

Articulation disorders are commonly found in children with hearing impairment, cerebral palsy, cognitive impairment, CLP, and in adults as an after-effect of stroke and accidents. Articulation therapy is a systematic procedure in which a misarticulated sound is corrected at various levels by speech-language pathologists. However, there is a lack of trained rehabilitation professionals leading to a scarcity of rehabilitation services.

Articulate+, a computer-based tool intended to address this gap, works as follows. Speech samples of the person with an articulation disorder are acquired by the computer through its microphone. The disordered speech is then recognized using automatic speech recognition. Suitable features are extracted from the normal as well as the disordered speech.

Dynamic time warping (DTW) matching is then used to match phonemes in normal speech with the corresponding phonemes in disordered speech to find a measure of similarity.
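
For readers unfamiliar with DTW, a minimal textbook version is sketched below. It aligns two sequences of feature vectors that may differ in length or speaking rate and returns the accumulated distance along the best alignment (lower means more similar). The Euclidean frame distance, the three-way step pattern, and the toy two-dimensional features are assumptions made here; the source does not say which features or DTW variant the project uses.

```python
import math


def dtw_distance(seq_a, seq_b):
    """Dynamic time warping distance between two sequences of feature vectors."""
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    # cost[i][j] = minimal accumulated cost of aligning seq_a[:i] with seq_b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0

    for i in range(1, n + 1):
        for j in range(1, m + 1):
            # Euclidean distance between the two frames being compared.
            local = math.dist(seq_a[i - 1], seq_b[j - 1])
            cost[i][j] = local + min(
                cost[i - 1][j],      # advance in seq_a only
                cost[i][j - 1],      # advance in seq_b only
                cost[i - 1][j - 1],  # advance in both sequences
            )
    return cost[n][m]


# Toy feature sequences (hypothetical values, two features per frame).
reference = [(1.0, 0.0), (2.0, 1.0), (3.0, 1.0)]
slower = [(1.0, 0.0), (1.0, 0.0), (2.0, 1.0), (3.0, 1.0), (3.0, 1.0)]
altered = [(1.0, 0.0), (2.0, 3.0), (3.0, 1.0)]

print(dtw_distance(reference, slower))   # same frames, spoken more slowly
print(dtw_distance(reference, altered))  # middle frame differs
```

Because the warping absorbs differences in timing, the slower rendition scores as identical to the reference, while the altered middle frame produces a non-zero distance. That property is what makes DTW suitable for comparing a phoneme in normal speech with its counterpart in disordered speech.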

The collaborative research team, led by Prof. S.R.M. Prasanna of the Indian Institute of Technology, Guwahati, and AIISH, envisions that Articulate+ will help speech pathologists, special educators, and caregivers of people with communication disorders. Using Articulate+, people with articulation disorders could take advantage of fast and efficient rehabilitation services, which will further enhance their quality of life.

A state-of-the-art acoustics laboratory equipped with Brüel & Kjær's PULSE™ multi-analysis system, electroacoustic test system, head and torso simulator (HATS), artificial ear and mastoid, and hand-held analyzers with sound recording software provides the major infrastructure to support the research activities of Dr. Abraham.

Communication and quality of life

As humans, our ability to communicate is essential to our quality of life – no matter who we are or where we come from. Particularly in rural India, communication disorders are very prevalent. Although there are many speech professionals working to help people with communication disorders, there is generally a lack of acoustic research and clinical tools to do this optimally.

AIISH is working hard to change this with applied research that impacts the hearts and lives of people with communication disorders. From identifying communication disorders in newborns to helping assess and rehabilitate people suffering from CLP, and helping rehabilitate children with articulation disorders, the tools from Brüel & Kjær have proven essential to this endeavor.

The team at AIISH will continue to explore these tools as they work towards their goal of ‘promoting quality of life for people with communication disorders’.

Dr. Ajish K. Abraham is a professor of electronics and acoustics at AIISH who specializes in acoustic analysis of defective speech and in electroacoustics. As head of the Dept. of Electronics, he is mandated to develop automated tools and processes that will result in early identification of people with communication disorders and their effective rehabilitation.

Visit the website: All India Institute of Speech & Hearing (AIISH)
